Within ULM-2, Emiel van Miltenburg focusses on the perception of images and sounds.

If we ever want to communicate with computers, it is essential that they understand the close ties between the language we use and the world around us. In this second Spinoza project, we study how people talk about sounds and images, in order to understand what it takes for machines to do the same. In this work, we emphasize the role of world knowledge and everyday expectations. When you ask people to describe a sound or an image, they rarely take it at face value. Rather, they start to interpret and (re-)contextualize whatever is presented to them. One of the challenges in this project is to show how the language people use to describe sounds and images can be traced back to their perspective on the world.


Picture of a herd of sheep, with a shepherd and a mule, in a rural landscape.

Picture of sheep overlaid with eye-tracking data.

Photo by Jacinta Lluch Valero (CC BY-SA 2.0)

The Dutch Image Description and Eye-tracking Corpus (DIDEC) contains 307 images from the MS COCO dataset, provided with eye-tracking data and spoken descriptions.

- The VU Sound Corpus

This dataset contains a large number of sound effects that participants tried to name in an online experiment. This gives us an idea of the vocabulary that people use to describe sounds. Furthermore, our data show how limited our ability is to recognize sounds without knowing their context.

Link: https://github.com/CrowdTruth/VU-Sound-Corpus

Navigation tool: https://github.com/evanmiltenburg/SoundBrowser

- Negations in Flickr30K

This dataset contains image descriptions with negations (words like "no", "nothing", and "without"). We categorized these descriptions to see why people use negations when describing images.

Link: https://github.com/evanmiltenburg/annotating-negations
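As a rough illustration of the first step, finding descriptions that contain negations, the sketch below flags descriptions by matching a small list of cue words. It is a minimal sketch under stated assumptions, not the procedure used to build the dataset: the function names `negation_cues` and `filter_negated`, the cue words beyond "no", "nothing", and "without", and the example sentences are all hypothetical. The categorization step described above is not covered.

```python
import re

# Hypothetical cue list: "no", "nothing" and "without" come from the text above;
# the remaining words are assumptions added for illustration.
NEGATION_CUES = {"no", "not", "nothing", "none", "never", "nobody", "without"}


def negation_cues(description: str) -> list[str]:
    """Return the negation cue words found in one description, in order."""
    tokens = re.findall(r"[a-z']+", description.lower())
    return [tok for tok in tokens if tok in NEGATION_CUES]


def filter_negated(descriptions: list[str]) -> list[tuple[str, list[str]]]:
    """Keep only descriptions with at least one cue, paired with the cues found."""
    results = []
    for desc in descriptions:
        cues = negation_cues(desc)
        if cues:
            results.append((desc, cues))
    return results


if __name__ == "__main__":
    # Invented example descriptions, not taken from Flickr30K.
    sample = [
        "A man without a shirt is riding a bike.",
        "Two dogs play in the snow.",
        "A street with no cars on it.",
    ]
    for desc, cues in filter_negated(sample):
        print(cues, desc)
```

A plain word list like this misses contracted forms such as "isn't" and cannot tell what a negation is doing in a sentence, so at best it produces candidates for manual inspection.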


Selected publications

- E. van Miltenburg, “Stereotyping and bias in the Flickr30K dataset,” in Proceedings of Multimodal Corpora: Computer Vision and Language Processing (MMC 2016), 2016, pp. 1-4.

- E. van Miltenburg, B. Timmermans, and L. Aroyo, “The VU Sound Corpus: Adding more fine-grained annotations to the Freesound database,” in Proceedings of the Tenth International Conference on Language Resources and Evaluation (LREC 2016), Portorož, Slovenia, 2016, pp. 26-31.

- A. Lopopolo and E. van Miltenburg, “Sound-based distributional models,” in Proceedings of the 11th International Conference on Computational Semantics, London, UK, 2015, pp. 70-75.

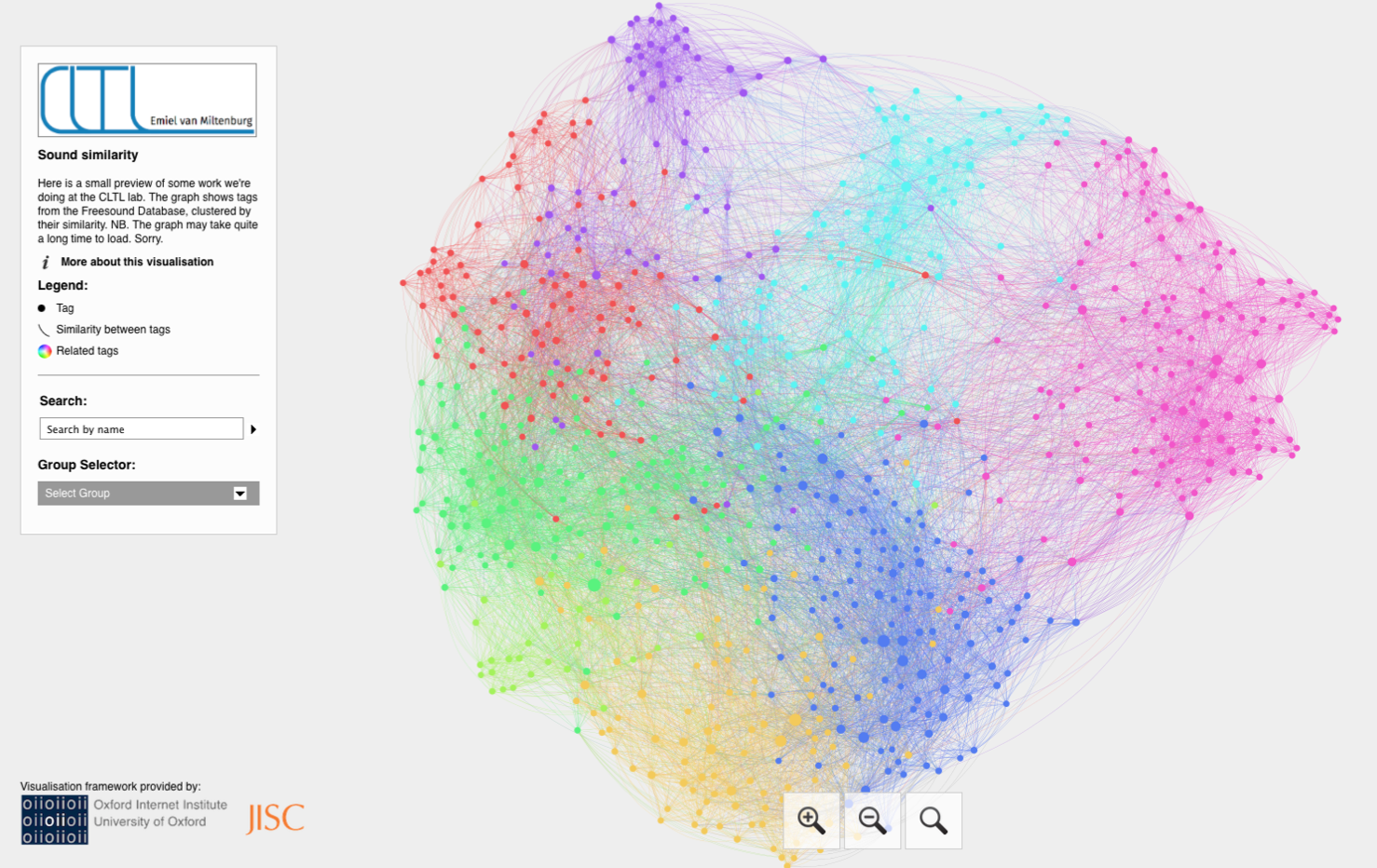

Sound similarity by Emiel van Miltenburg. The graph shows tags from the Freesound Database, clustered by their similarity.

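The page does not say how the similarity between Freesound tags in the graph above was computed. One common option, sketched here under that assumption, is to represent each tag by the set of sounds it is attached to, score tag pairs with Jaccard similarity, link pairs that reach a threshold, and read off the connected components as clusters. The function names, the threshold value, and the toy data are hypothetical, and this is not necessarily the method behind the graph.

```python
from itertools import combinations


def jaccard(a: set[str], b: set[str]) -> float:
    """Jaccard similarity between two sets of sound IDs."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def cluster_tags(tag_to_sounds: dict[str, set[str]], threshold: float) -> list[set[str]]:
    """Group tags into connected components of a graph that links tag pairs
    whose Jaccard similarity is at least `threshold`."""
    # Build an undirected similarity graph as an adjacency list.
    neighbours = {tag: set() for tag in tag_to_sounds}
    for t1, t2 in combinations(tag_to_sounds, 2):
        if jaccard(tag_to_sounds[t1], tag_to_sounds[t2]) >= threshold:
            neighbours[t1].add(t2)
            neighbours[t2].add(t1)

    # Collect connected components with an iterative depth-first search.
    clusters, seen = [], set()
    for start in tag_to_sounds:
        if start in seen:
            continue
        component, stack = set(), [start]
        while stack:
            tag = stack.pop()
            if tag in seen:
                continue
            seen.add(tag)
            component.add(tag)
            stack.extend(neighbours[tag] - seen)
        clusters.append(component)
    return clusters


if __name__ == "__main__":
    # Invented toy data: each tag maps to the IDs of sounds it was attached to.
    tags = {
        "rain": {"s1", "s2", "s3"},
        "storm": {"s2", "s3", "s4"},
        "thunder": {"s3", "s4"},
        "dog": {"s7", "s8"},
        "bark": {"s7", "s8", "s9"},
    }
    for cluster in cluster_tags(tags, threshold=0.4):
        print(sorted(cluster))
```

With this toy data and a threshold of 0.4, "rain", "storm", and "thunder" end up in one cluster and "dog" and "bark" in another. Raising the threshold splits clusters apart; a real analysis would likely use a proper clustering or community-detection method rather than a single cut-off.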